Harmonic Motion

Embodying the Chinese Guzheng Instrument through Classic Ballet Movements with Machine Learning

YUTING XUE

ELKE REINHUBER∗

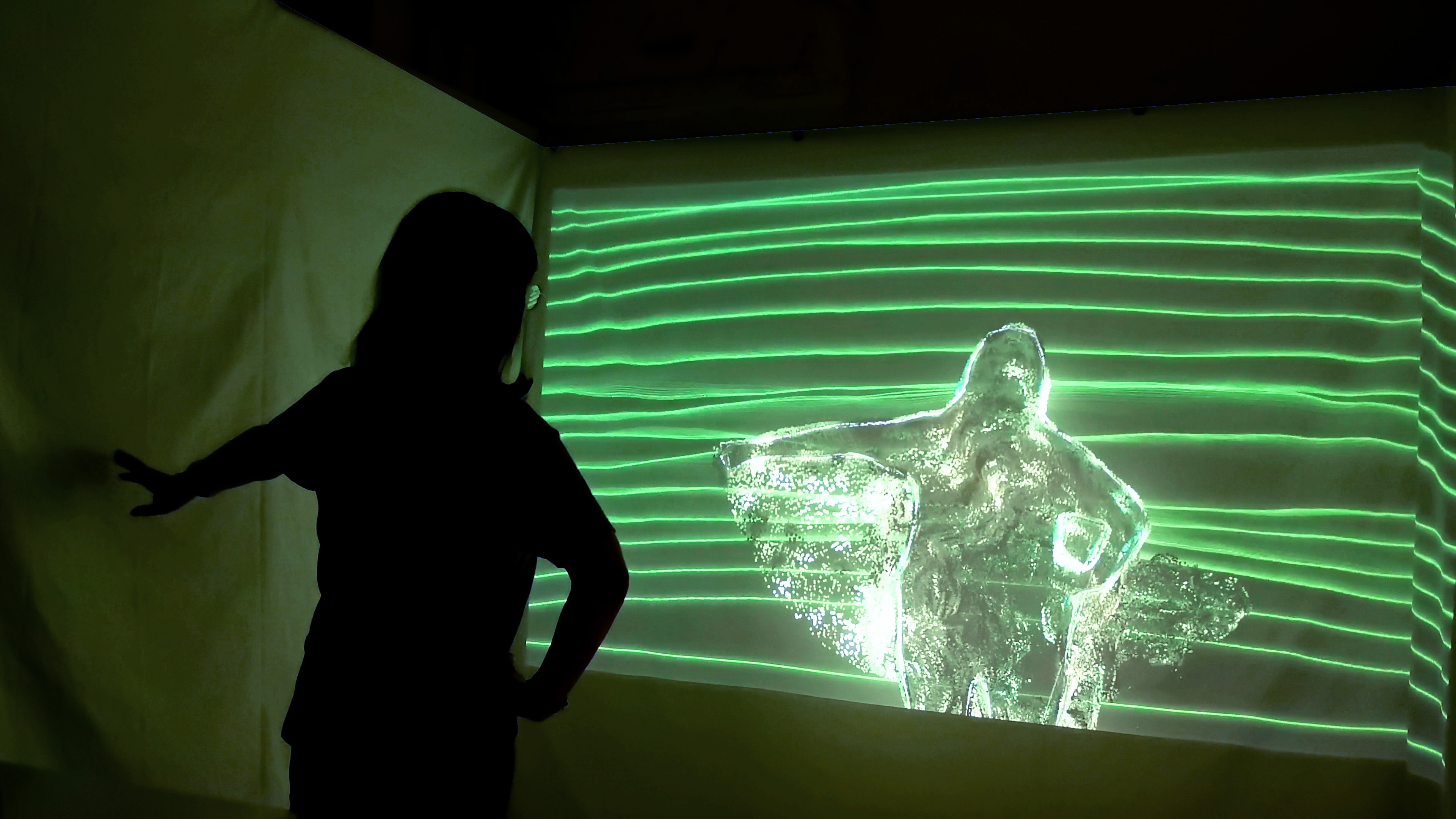

Harmonic Motion is a body-driven interactive sound system that maps ballet movements to Guzheng notes and timbres based on machine learning. By training Classification and Regression models, the system allows participants to “play” the Guzheng through ballet-inspired motion without requiring any professional background, offering a real-time audiovisual performance experience.

This project reinterprets the Guzheng as a digital intercultural instrument, merging Chinese traditional heritage with Western dance movement. Machine learning functions as an agent of creative transformation, linking physical expression, sonic design, and cultural narrative to enable new modes of performance and cross-cultural artistic dialogue through embodied interaction.

The Guzheng is a traditional Chinese plucked string instrument. It has 21 strings and can produce seven notes over four octaves: Do, Re, Mi, Sol, and La on the open strings, with five strings in each octave, and Fa and Si obtained by pressing the strings. Ballet is a highly codified Western dance organized around seven basic arm positions and foot positions that form the foundation of its technique. Although the Guzheng and ballet arose from different cultural and historical backgrounds, they share common structural, expressive, and rhythmic elements.
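The string layout described above (five open-string notes per octave across four octaves, 21 strings in total) can be sketched as a short calculation. This is an illustrative sketch only: the choice of D2 (MIDI note 38) as the lowest string follows the common D-major tuning and is an assumption, not something stated in this text, and the function name `guzheng_strings` is hypothetical.

```python
# Pentatonic degrees Do, Re, Mi, Sol, La as semitone offsets from the tonic.
PENTATONIC_OFFSETS = [0, 2, 4, 7, 9]
SOLFEGE = ["Do", "Re", "Mi", "Sol", "La"]

# Assumption: lowest string is D2 (MIDI 38), as in the common D-major tuning.
LOWEST_TONIC_MIDI = 38
NUM_STRINGS = 21


def guzheng_strings(lowest_tonic=LOWEST_TONIC_MIDI, num_strings=NUM_STRINGS):
    """Return (solfege, midi_note) pairs ordered from lowest to highest string."""
    strings = []
    for i in range(num_strings):
        # Every five strings complete one octave of the pentatonic scale.
        octave, degree = divmod(i, len(PENTATONIC_OFFSETS))
        midi = lowest_tonic + 12 * octave + PENTATONIC_OFFSETS[degree]
        strings.append((SOLFEGE[degree], midi))
    return strings


if __name__ == "__main__":
    for number, (name, midi) in enumerate(guzheng_strings(), start=1):
        print(number, name, midi)
```

Four full octaves account for 20 strings; the 21st string repeats Do one octave above the last complete group, which is why the range spans four octaves from the lowest to the highest string.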

This work is a live performance experience that does not require a professional ballet dancer. The public is free to explore expressive body positions intuitively, with each motion mapped to distinct tonal textures based on the speed, height, distance, and fluidity of movement. The system responds organically to each participant’s unique movement style.


Motion-Controlled Guzheng through Ballet Vocabulary

The system integrates three modules: “Motion Capture and Processing,” “ML Training,” and “Output” (Figure 3). It begins with a Kinect V2 sensor, which captures the performer’s skeleton and depth data in real time and sends the raw data to TouchDesigner (version 2023.12370). TouchDesigner extracts and calculates a ten-dimensional set of parameters that describe both the position and the dynamics of the user’s body movements. These values are transmitted in real time via the Open Sound Control (OSC) protocol to the next module, Wekinator (version 2.0), a real-time interactive machine learning platform, where they serve as training inputs. Classification and regression models then learn the correlations between ballet body movements and Guzheng notes and timbre. Wekinator also connects to Sonic Pi (version 4.5.1) for real-time listening during training. Once “ML Training” is finished, Wekinator sends the trained models’ outputs to Sonic Pi and TouchDesigner via OSC to generate the audiovisual embodied interaction.
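To make the OSC hand-off between modules concrete, the following minimal sketch encodes ten float parameters as a single OSC message and sends it over UDP. It is not the project’s TouchDesigner network; it only illustrates the message format. The port 6448 and address `/wek/inputs` are Wekinator’s default input settings, and whether the project keeps those defaults is an assumption. The function names and the placeholder feature values are hypothetical.

```python
import socket
import struct


def _osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def build_osc_message(address, values):
    """Build an OSC message whose arguments are all big-endian float32 values."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", float(v)) for v in values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_features(features, host="127.0.0.1", port=6448, address="/wek/inputs"):
    """Send one frame of movement parameters as a UDP datagram.

    Assumption: host, port, and address match the receiver's OSC input settings.
    """
    message = build_osc_message(address, features)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))
    return message


if __name__ == "__main__":
    # Placeholder values standing in for the ten movement parameters.
    frame = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
    send_features(frame)
```

Sending all ten values in one message per frame keeps each training example aligned with a single moment of movement, which is the form Wekinator expects for its inputs.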
